Review: The Box, by Marc Levinson

| Publisher: | Princeton University Press |
|---|---|
| Copyright: | 2006, 2008 |
| Printing: | 2008 |
| ISBN: | 0-691-13640-8 |
| Format: | Trade paperback |
| Pages: | 278 |

The shipping container as we know it is only about 65 years old. Shipping things in containers is obviously much older; we've been doing that for longer than we've had ships. But the standardized metal box, set on a rail car or loaded with hundreds of its indistinguishable siblings into an enormous, specially designed cargo ship, became economically significant only recently. Today it is one of the oft-overlooked foundations of global supply chains. The startlingly low cost of container shipping is part of why so much of what US consumers buy comes from Asia, and why most complex machinery is assembled in multiple countries from parts gathered from a dizzying variety of sources.

Marc Levinson's The Box is a history of container shipping, from its (arguable) beginnings in the trailer bodies loaded on Pan-Atlantic Steamship Corporation's Ideal-X in 1956 to just-in-time international supply chains in the 2000s. It's a popular history that falls on the academic side, with a full index and 60 pages of citations and other notes. (Per my normal convention, those pages aren't included in the sidebar page count.)

The Box is organized mostly chronologically, but Levinson takes extended detours into labor relations and container standardization at the appropriate points in the timeline. The book is very US-centric. Asian, European, and Australian shipping is discussed mostly in relation to trade with the US, and Africa is barely mentioned. I don't have the background to know whether this is historically correct for container shipping or is an artifact of Levinson's focus.

Many single-item popular histories focus on something that involves obvious technological innovation (paint pigments) or deep cultural resonance (salt) or at least entertaining quirkiness (punctuation marks, resignation letters).

Shipping containers are important but simple and boring. The least interesting chapter in The Box covers container standardization, in which a whole bunch of people had boring meetings, wrote some things down, discovered many of the things they wrote down were dumb, wrote more things down, met with different people to have more meetings, published a standard that partly reflected the fixations of that one guy who is always involved in standards discussions, and then saw that standard be promptly ignored by the major market players.

You may be wondering if that describes the whole book. It doesn't, but not because of the shipping containers. The Box is interesting because the process of economic change is interesting, and container shipping is almost entirely about business processes rather than technology.

Levinson starts the substance of the book with a description of shipping before standardized containers. This was the most effective, and probably the most informative, chapter. Beyond some vague ideas picked up via cultural osmosis, I had no idea how cargo shipping worked. Levinson gives the reader a memorable feel for the sheer amount of physical labor involved in loading and unloading a ship with mixed cargo (what's called "breakbulk" cargo to distinguish it from bulk cargo like coal or wheat that fills an entire hold). It's not just the effort of hauling barrels, bales, or boxes with cranes or raw muscle power, although that is significant. It's also the need to touch every piece of cargo to move it, inventory it, warehouse it, and then load it on a truck or train.

The idea of container shipping is widely attributed, including by Levinson, to Malcom McLean, a trucking magnate who became obsessed with the idea of what we now call intermodal transport: using the same container for goods on ships, railroads, and trucks so that the contents don't have to be unpacked and repacked at each transfer point. Levinson uses his career as an anchor for the story, from his acquisition of Pan-Atlantic Steamship Corporation to pursue his original idea (backed by private equity and debt, in a very modern twist), through his years running Sea-Land as the first successful major container shipper, and culminating in his disastrous attempted return to shipping by acquiring United States Lines.

I am dubious of Great Man narratives in history books, and I think Levinson may be overselling McLean's role. Container shipping was an obvious idea that the industry had been talking about for decades. Even Levinson admits that, despite a few gestures at giving McLean central credit. Everyone involved in shipping understood that cargo handling was the most expensive and time-consuming part, and that if one could minimize cargo handling at the docks by loading and unloading full containers that didn't have to be opened, shipping costs would be much lower (and profits higher). The idea wasn't the hard part. McLean was the first person to pull it off at scale, thanks to some audacious economic risks and a willingness to throw sharp elbows and play politics, but it seems likely that someone else would have played that role if McLean hadn't existed.

Container shipping didn't happen earlier because achieving that cost savings required a huge expenditure of capital and a major disruption of a transportation industry that wasn't interested in being disrupted. The ships had to be remodeled and eventually replaced; manufacturing had to change; railroad and trucking in theory had to change (in practice, intermodal transport, McLean's obsession, didn't happen at scale until much later); pricing had to be entirely reworked; logistical tracking of goods had to be done much differently; and significant amounts of extremely expensive equipment to load and unload heavy containers had to be designed, built, and installed. McLean's efforts proved the cost savings were real and compelling, but it still took two decades before the shipping industry reconstructed itself around containers.

That interim period is where this history becomes a labor story, and that's where Levinson's biases become somewhat distracting.

In the United States, loading and unloading of cargo ships was done by unionized longshoremen through a bizarre, complex, and long-standing system of contract hiring. The cost savings of container shipping come almost completely from the loss of work for longshoremen. It's a classic replacement of labor with capital; the work done by gangs of twenty or more longshoremen is instead done by a single crane operator at much higher speed and efficiency. The longshoremen's unions therefore opposed containerization and launched numerous strikes and other labor actions to delay use of containers, force continued hiring that containers made unnecessary, or win buyouts and payoffs for current longshoremen.

Levinson is trying to write a neutral history and occasionally shows some sympathy for longshoremen, but they still get the Luddite treatment in this book: the doomed reactionaries holding back progress. Longshoremen had a vigorous and powerful union that won better working conditions structured in ways that look absurd to outsiders, such as requiring that ships hire twice as many men as necessary so that half of them could get paid while not working. The unions also had a reputation for corruption that Levinson stresses constantly, and theft of breakbulk cargo during loading and warehousing was common. One of the interesting selling points for containers was that lossage from theft during shipping apparently decreased dramatically.

It's obvious that the surface demand of the longshoremen unions, that either containers not be used or that just as many manual laborers be hired for container shipping as for earlier breakbulk shipping, was impossible, and that the profession as it existed in the 1950s was doomed. But beneath those facts, and the smoke screen of Levinson's obvious distaste for their unions, is a real question about what society owes workers whose jobs are eliminated by major shifts in business practices.

That question of fairness becomes more pointed when one realizes that this shift was massively subsidized by US federal and local governments.

McLean's Sea-Land benefited from direct government funding and subsidized navy surplus ships, massive port construction in New Jersey with public funds, and a sweetheart logistics contract from the US military to supply troops fighting the Vietnam War that was so generous that the return voyage was free and every container Sea-Land picked up from Japanese ports was pure profit. The US shipping industry was heavily government-supported, particularly in the early days when the labor conflicts were starting.

Levinson notes all of this, but never draws the contrast between the massive support for shipping corporations and the complete lack of formal support for longshoremen. There are hard ethical questions about what society owes displaced workers even in a pure capitalist industry transformation, and this was very far from pure capitalism. The US government bankrolled large parts of the growth of container shipping, but the only way that longshoremen could get part of that money was through strikes to force payouts from private shipping companies.

There are interesting questions of social and ethical history here that would require careful disentangling of the tendency of any group to oppose disruptive change and fairness questions of who gets government support and who doesn't. They will have to wait for another book; Levinson never mentions them.

There were some things about this book that annoyed me, but overall it's a solid work of popular history and deserves its fame. Levinson's account is easy to follow, specific without being tedious, and backed by voluminous notes. It's not the most compelling story on its own merits; you have to have some interest in logistics and economics to justify reading the entire saga. But it's the sort of history that gives one a sense of the fractal complexity of any area of human endeavor, and I usually find those worth reading.

Recommended if you like this sort of thing.

Rating: 7 out of 10

Happy 2024!

DAIS have continued their programme of posthumous Coil remasters and re-issues.

Constant Shallowness Leads To Evil was remastered by Josh Bonati in 2021 and re-released in 2022 in a dizzying array of different packaging variants. The original releases in 2000 had barely any artwork, and given that void I think Nathaniel Young has done a great job of creating something compelling.

A limited number of copies of the original re-issue have special lenticular covers, although these were not sold by any distributors outside the US. I tried to find a copy on my trip to Portland in 2022, to no avail.

Last year DAIS followed Constant with Queens Of The Circulating Library, same deal: limited lenticular covers, US only.

Both are also available digital-only, e.g. on Bandcamp: Constant, Queens.

The original, pre-remastered releases have been freely available on archive.org for a long time: Constant, Queens.

Both of these releases feel to me as though they were made available by the group somewhat as an afterthought, having been produced primarily as part of their live efforts. (I'm speculating freely here; it might not be true.) Live takes of some of this material exist in the form of Coil Presents Time Machines, which has not (yet) been reissued. In my opinion this is a really compelling recording. I vividly remember listening to this whilst trying to get an hour's rest in a hotel somewhere on a work trip. It took me to some strange places!

I'll leave you with one of my favourite moments from "Colour Sound Oblivion", Coil's video collection of live backdrops. When this was performed live it was also called "Constant Shallowness Leads To Evil", although it's distinct from the material on the LP:

Also available on archive.org. A version of this Constant made it onto a Russian live bootleg, which is available on Spotify and Bandcamp, complete with some John Balance banter:


Like each month, have a look at the work funded by

I think while developing Wayland-as-an-ecosystem we are now entrenched in narrow concepts of how a desktop should work. While discussing Wayland protocol additions, a lot of concepts clash: people from different desktops with different design philosophies debate the merits of those over and over again, never reaching any conclusion (just as you will never get an answer out of humans on whether sushi or pizza is the clearly superior food, or whether CSD or SSD is better). Some people want to use Wayland as a vehicle to force applications to submit to their desktop's design philosophies, others prefer the smallest and leanest protocol possible, and other developers want the most elegant behavior possible. To be clear, I think those are all very valid approaches.

But this also creates problems: by switching to Wayland compositors, we are already forcing a lot of porting work onto toolkit developers and application developers. This is annoying, but it is just work that has to be done. It becomes frustrating, though, if Wayland provides toolkits with absolutely no reasonable way to reach their goal. To use Nate's Photoshop analogy: of course Linux does not break Photoshop; it is Adobe's responsibility to port it. But what if Linux was missing a crucial syscall that Photoshop needed for proper functionality, and Adobe couldn't port it without that? In that case it becomes much less clear who is to blame for Photoshop not being available.

A lot of Wayland protocol work is focused on the environment and design, while applications and the work to port them are often given less consideration. I think this happens because the overlap between application developers and desktop environment developers is not necessarily large, and the overlap with people willing to engage with Wayland upstream is even smaller. Windows developers who port apps to Linux and are also involved with toolkits or Wayland are pretty much nonexistent, so they have less of a voice.


I will also bring my two protocol MRs to their conclusion for sure, because as application developers we need clarity on what the platform (either all desktops or even just a few) supports and will or will not support in future. And the only way to get something good done is by contribution and friendly discussion.


This year was hard from a personal and work point of view, which impacted the amount of Free Software bits I ended up doing; even when I had the time, I often wasn't in the right head space to make progress on things. However, writing this annual recap has been a useful exercise, as I achieved more than I realised. For previous years see

One of the knitting projects I'm working on is a big bottom-up triangular shawl in less-than-fingering weight yarn (NM 1/15): it feels like a cloud should by all rights feel, and I have high expectations of it, but it's taking forever and a day.

And then one day last spring I started thinking in the general direction of top-down shawls, and decided I couldn't wait until I had finished the first one to see if I could design one.

For my first attempt I used an odd ball of 50% wool, 50% plastic I had in my stash and worked it on 12 mm tree trunks, and I quickly made something between a scarf and a shawl that got some use during the summer thunderstorms when temperatures got a bit lower, but not really cold. I was happy with the shape, though not with the exact position of the increases, but I had ideas for improvements, so I just had to try another time.

Digging through the stash I found four balls of Drops Alpaca in two shades of grey: I had bought it with the intent to test its durability in somewhat more demanding situations (such as gloves or even socks), but then the LYS


I'm glad that I did it, however, as it's still soft and warm, but now also looks nicer.

The pattern is of course online as #FreeSoftWear on


This post should have marked the beginning of my yearly roundups of the favourite books and movies I read and watched in 2023.

However, due to coming down with a nasty bout of flu recently and other sundry commitments, I wasn't able to undertake writing the necessary four or five blog posts. In lieu of this, I will simply present my (unordered and unadorned) highlights for now. Do get in touch if this (or any of my previous posts) has spurred you into picking something up yourself.


By the influencers on the famous proprietary video platform

Anyway, my brain suddenly decided that I needed a red wool dress, fitted enough to give some bust support. I had already made a

I knew that I didn't have enough fabric to add a flounce to the hem, as in the cotton dress, but then I remembered that some time ago I fell for a piece of fringed trim in black, white and red. I did a quick check that the red wasn't clashing (it wasn't) and I knew I had a plan for the hem decoration.

Then I spent a week finishing other projects, and the more I thought about this dress, the more I was tempted to have spiral lacing at the front rather than buttons, as a nod to the kirtle inspiration.

It may end up being a bit of a hassle, but if it is too much I can always add a hidden zipper on a side seam, and only have to undo a bit of the lacing around the neckhole to wear the dress.

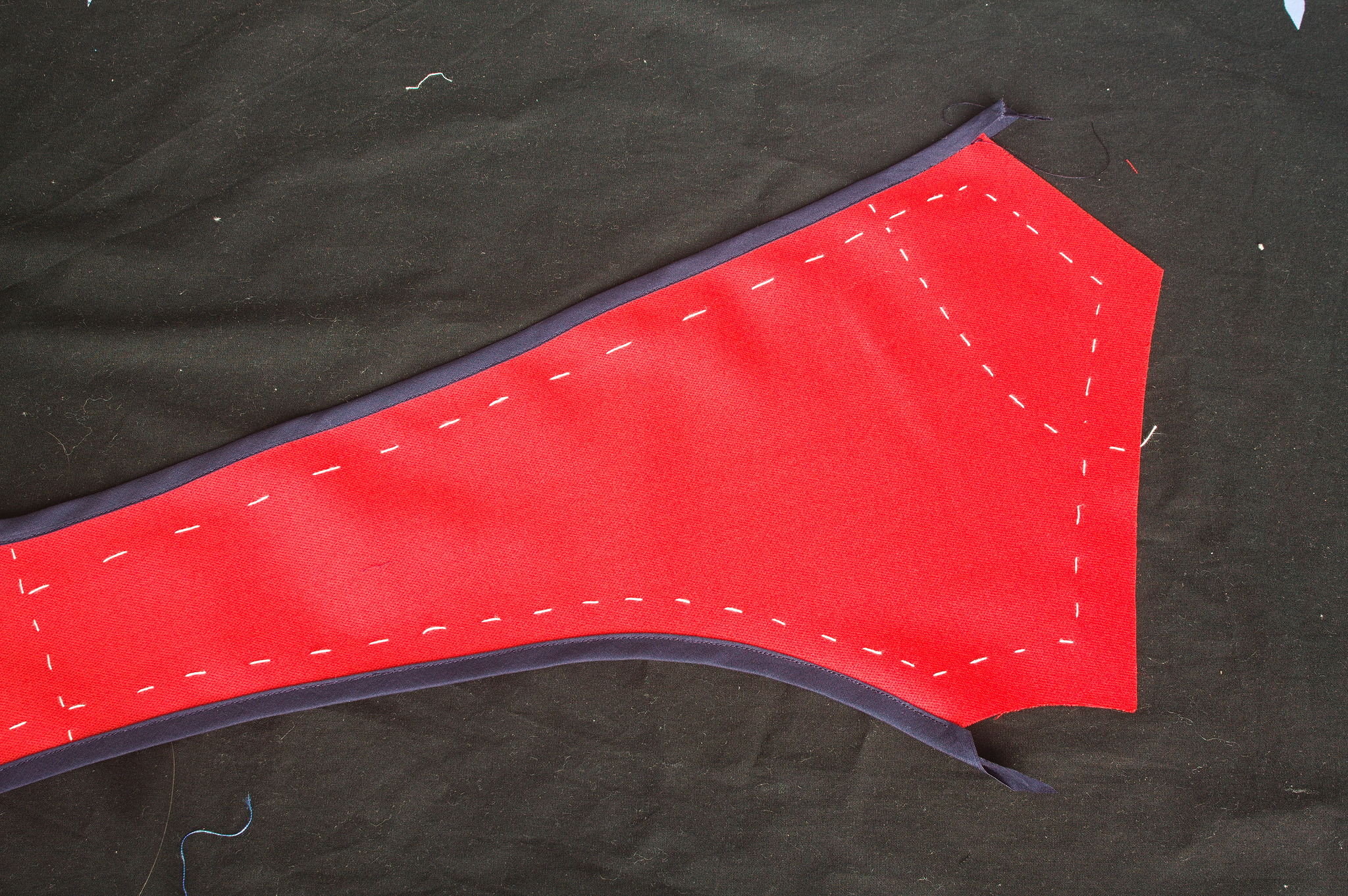

Finally, I could start working on the dress: I cut all of the main pieces, and since the seam lines were quite curved I marked them with tailor's tacks, which I don't exactly enjoy doing or removing, but which are the only method that was guaranteed to survive while manipulating this fabric (and not leave traces afterwards).


While cutting the front pieces I accidentally cut the high neckline instead of the one I had used on the cotton dress: I decided to go with it on the back pieces as well and decide later whether I wanted to lower it.

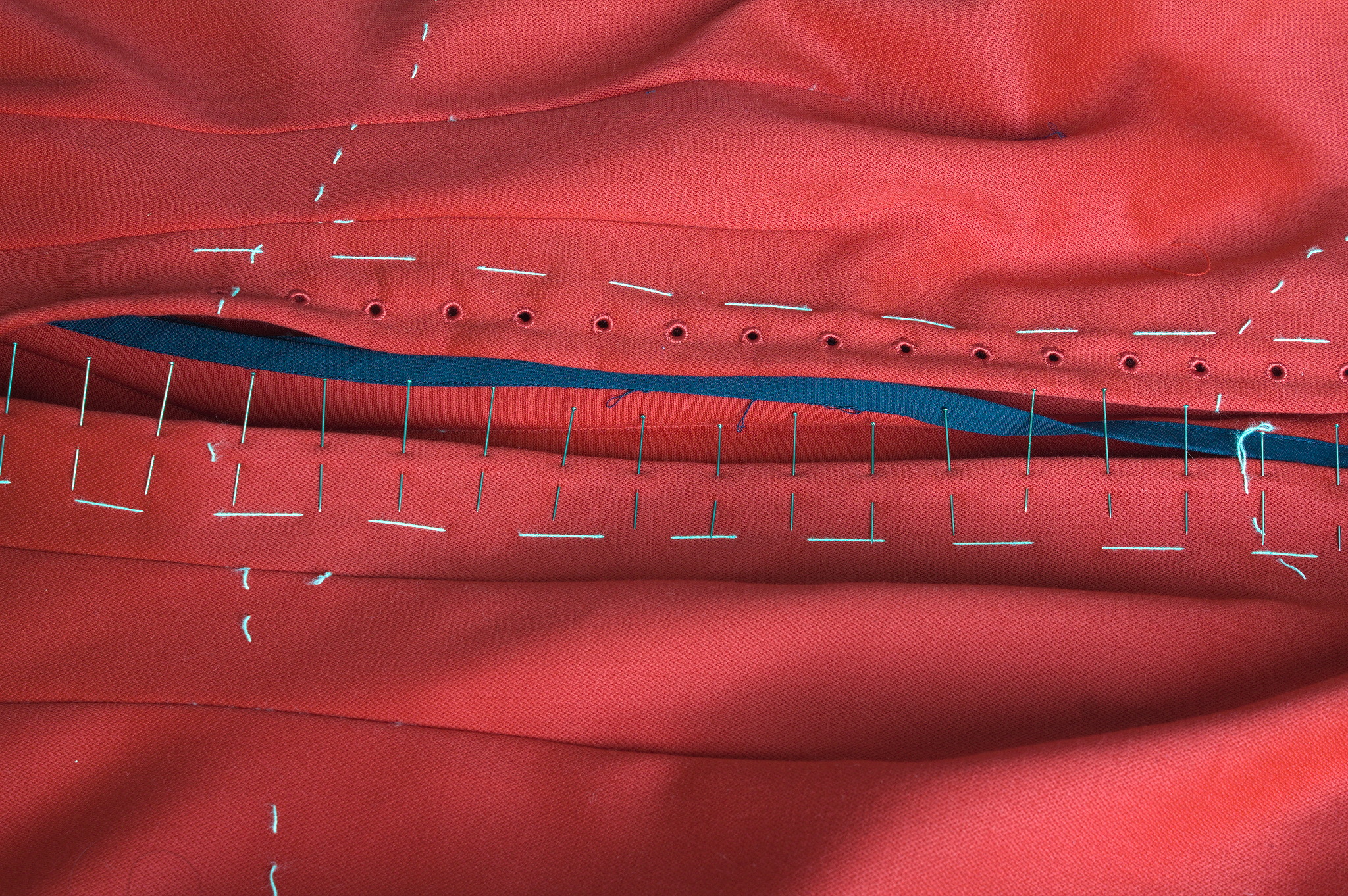

Since this is a modern dress, with no historical accuracy at all, and I have access to a serger, I decided to use some dark blue cotton voile I've had in my stash for quite some time, cut into bias strips, to bind the raw edges before sewing. This works significantly better than bought bias tape, which is a bit too stiff for this.


For the front opening, I've decided to reinforce the areas where the lacing holes will be with cotton: I've used some other navy blue cotton, also from the stash, and added two lines of cording to stiffen the front edge.

So I've cut the front in two pieces rather than on the fold, sewn the reinforcements to the seam allowances in such a way that the corded edge was aligned with the center front, and then sewn the bottom of the front seam from just before the end of the reinforcements to the hem.


The allowances are then folded back and kept in place by the worked lacing holes. The cotton was pinked, while for the wool I used the selvedge of the fabric, so there was no need for any finishing.

Behind the opening I've added a modesty placket: I've cut a strip of red wool and a strip of cotton, folded the edge of the cotton strip to the center, added cording to the long sides, pressed the allowances of the wool towards the wrong side, and then handstitched the cotton to the wool, wrong sides facing. This was finally handstitched to one side of the seam allowance of the center front.

I've also decided to add real pockets, rather than just slits, and for some reason I decided to add them by hand after I had sewn the dress, so I've left openings in the side back seams, where the slits were in the cotton dress. I've also already worn the dress, but haven't added the pockets yet, as I'm still debating their shape. This will be fixed in the near future.

Another thing that will have to be fixed is the trim situation: I like the fringe at the bottom, and I had enough to also make a belt, but this makes the top of the dress a bit empty. I can't use the same fringe tape, as it is too wide, but it would be nice to have something smaller that matches the patterned part. And I think I can make something suitable with tablet weaving, but I'm not sure which materials to use, so it will have to be on hold for a while, until I decide on the supplies and have the time to make it.

Another improvement I'd like to make is detached sleeves, both matching (I should still have just enough fabric) and contrasting, but first I want to learn more about real kirtle construction, and maybe start making sleeves that would also be suitable for a real kirtle.

Meanwhile, I've worn it at Christmas (over my 1700s menswear shirt with big sleeves) and may wear it again tomorrow (if I bother to dress up to spend New Year's Eve at home :D )


Debian Public Statement about the EU Cyber Resilience Act and the Product Liability Directive

The European Union is currently preparing a regulation "on horizontal cybersecurity requirements for products with digital elements" known as the Cyber Resilience Act (CRA). It is currently in the final "trilogue" phase of the legislative process. The act includes a set of essential cybersecurity and vulnerability handling requirements for manufacturers. It will require products to be accompanied by information and instructions to the user. Manufacturers will need to perform risk assessments and produce technical documentation and, for critical components, have third-party audits conducted. Discovered security issues will have to be reported to European authorities within 25 hours (1). The CRA will be followed up by the Product Liability Directive (PLD) which will introduce compulsory liability for software.

While a lot of these regulations seem reasonable, the Debian project believes that there are grave problems for Free Software projects attached to them.

Therefore, the Debian project issues the following statement:
